Overview of ELK Stack Deployment on Debian

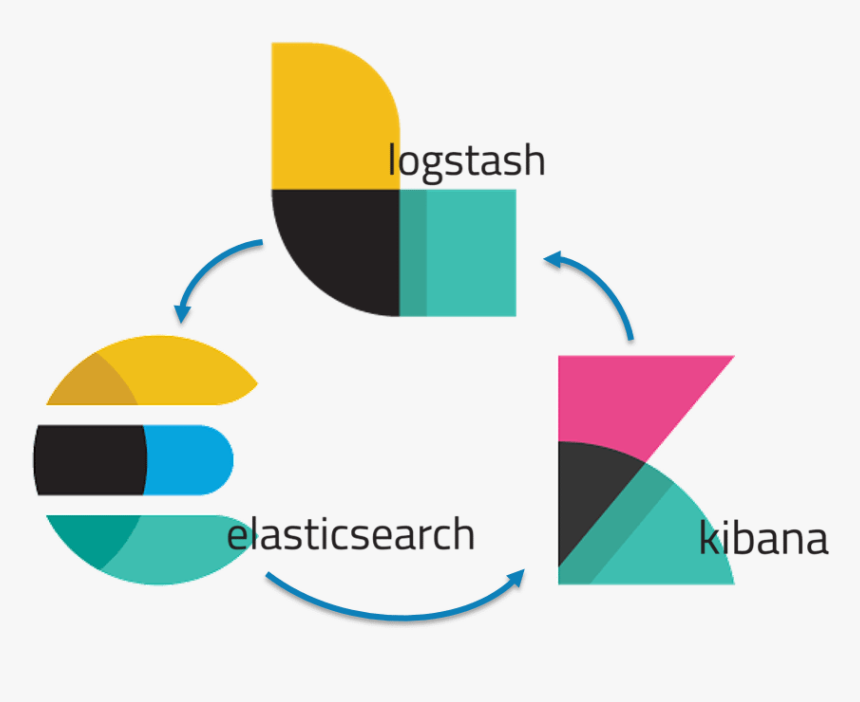

The ELK Stack is a widely used solution for centralized logging and real-time data analysis. It consists of three open-source tools:

- Elasticsearch: A search and analytics engine.

- Logstash: A data processing pipeline for collecting, filtering, and forwarding data.

- Kibana: A visualization tool for exploring and analyzing data stored in Elasticsearch.

This guide walks you step by step through deploying and configuring the ELK Stack on Debian servers: a two-node Elasticsearch cluster, Logstash and Kibana on a third node, and Filebeat on the servers whose logs you want to collect.

Prerequisites

Before starting, ensure the following requirements are met:

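- Three Debian 12 servers, each with at least 4 GB RAM and 2 CPUs: Node 1 and Node 2 for Elasticsearch, and Node 3 for Logstash and Kibana.

- Root or sudo privileges for installation.

- Internet connectivity for downloading required packages.

- Network access between the servers on the ports used in this guide: 9200 (Elasticsearch HTTP), 9300 (Elasticsearch node-to-node transport), 5044 (Beats input on Logstash), and 5601 (Kibana).

- A `/var/log/syslog` file on every host whose syslog you want to collect. If a Debian 12 system logs only to the systemd journal, install rsyslog (`sudo apt install rsyslog`) to create it.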

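You can check each node against the sizing above with a small shell function. This is a minimal sketch with hypothetical helper names. It reads `MemTotal` from `/proc/meminfo` and the CPU count from `nproc`. Because `MemTotal` reports slightly less than the installed RAM, the 3.5 GiB threshold is an assumed safety margin, not an official Elastic limit.

```bash
#!/usr/bin/env bash
# Minimal sizing check for an ELK node (sketch; helper names are hypothetical).

# MemTotal is a little below installed RAM, so allow a margin: 3.5 GiB in kB.
# This threshold is an assumption, not an Elastic requirement.
MIN_MEM_KB=$((3584 * 1024))
MIN_CPUS=2

# meets_requirements MEM_KB CPUS: succeeds if both minimums are met.
meets_requirements() {
  [ "$1" -ge "$MIN_MEM_KB" ] && [ "$2" -ge "$MIN_CPUS" ]
}

# check_this_host: reads the real values from /proc/meminfo and nproc.
check_this_host() {
  local mem_kb cpus
  mem_kb=$(awk '/^MemTotal:/ { print $2 }' /proc/meminfo)
  cpus=$(nproc)
  if meets_requirements "$mem_kb" "$cpus"; then
    echo "OK: ${mem_kb} kB RAM, ${cpus} CPUs"
  else
    echo "Below minimum: ${mem_kb} kB RAM, ${cpus} CPUs" >&2
    return 1
  fi
}
```

Run `check_this_host` on Node 1, Node 2, and Node 3 before installing anything.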

Step 1: Setting Up Elasticsearch on Node 1 and Node 2

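Elasticsearch will be installed on both Node 1 and Node 2 to form a two-node cluster. To tolerate the loss of a master-eligible node, Elastic recommends at least three master-eligible nodes, so add a third Elasticsearch node if you need that level of resilience.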

1.1 Install Elasticsearch on Both Nodes

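1. Install Java (optional): Elasticsearch 8.x and Logstash ship with a bundled JDK, so you do not need to install Java separately. If you want a system JDK anyway, install OpenJDK 17, because Debian 12 does not package OpenJDK 11:

```bash
sudo apt update
sudo apt install openjdk-17-jdk -y
```

2. Add the Elasticsearch Repository: `apt-key` is deprecated on current Debian releases, so store the signing key in a dedicated keyring and reference that keyring from the source entry:

```bash
sudo apt install gnupg wget -y
wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | sudo gpg --dearmor -o /usr/share/keyrings/elasticsearch-keyring.gpg
echo "deb [signed-by=/usr/share/keyrings/elasticsearch-keyring.gpg] https://artifacts.elastic.co/packages/8.x/apt stable main" | sudo tee /etc/apt/sources.list.d/elastic-8.x.list
```

3. Install Elasticsearch:

```bash
sudo apt update
sudo apt install elasticsearch -y
```

1.2 Configure Elasticsearch Cluster Settings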

On both Node 1 and Node 2:

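1. Edit the Elasticsearch configuration file:

```bash
sudo nano /etc/elasticsearch/elasticsearch.yml
```

2. Update the configuration with the following (replace `<node1-ip>` and `<node2-ip>` with the IP addresses of Node 1 and Node 2):

```yaml
cluster.name: my-elk-cluster
node.name: node-1 # Use "node-2" on Node 2
network.host: 0.0.0.0
discovery.seed_hosts: ["<node1-ip>", "<node2-ip>"]
cluster.initial_master_nodes: ["node-1", "node-2"]
```

The 8.x package's security auto-configuration may already have added a `cluster.initial_master_nodes` line near the end of the file. If it has, keep only one of the two entries, because a duplicated setting prevents Elasticsearch from starting.

3. Start and Enable Elasticsearch on both nodes: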

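```bash
sudo systemctl enable elasticsearch
sudo systemctl start elasticsearch
```

4. Verify Cluster Health: Run the following command on either node to check the status:

```bash
curl -X GET "http://<node1-ip>:9200/_cluster/health?pretty"
```

The response should show both nodes (`"number_of_nodes" : 2`) and a green or yellow cluster status. The plain `http://` URLs in this guide assume that Elasticsearch security is disabled. Elasticsearch 8.x enables TLS and authentication by default. If you kept those defaults, query the cluster over HTTPS as the `elastic` user instead, for example `sudo curl --cacert /etc/elasticsearch/certs/http_ca.crt -u elastic "https://localhost:9200/_cluster/health?pretty"`.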
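To script the health check, for example in a provisioning run, you can test the response without installing jq. This sketch is not an Elastic tool, and `health_field` and `cluster_ok` are hypothetical names. The sed expression only handles flat top-level fields such as `status` and `number_of_nodes`, and `<node1-ip>` is the same placeholder used above.

```bash
#!/usr/bin/env bash
# Sketch: evaluate a _cluster/health response without jq (helper names are hypothetical).

# health_field JSON FIELD: prints a flat top-level field such as status or number_of_nodes.
health_field() {
  # Join pretty-printed lines, capture the text after "FIELD" :, then drop quotes and spaces.
  printf '%s\n' "$1" | tr -d '\n' |
    sed -n "s/.*\"$2\"[[:space:]]*:[[:space:]]*\([^,}]*\).*/\1/p" |
    tr -d '" '
}

# cluster_ok JSON EXPECTED_NODES: succeeds if status is green or yellow
# and the expected number of nodes has joined.
cluster_ok() {
  local status nodes
  status=$(health_field "$1" status)
  nodes=$(health_field "$1" number_of_nodes)
  case "$status" in
    green|yellow) [ "$nodes" = "$2" ] ;;
    *) return 1 ;;
  esac
}

# Usage (replace <node1-ip>):
# cluster_ok "$(curl -s "http://<node1-ip>:9200/_cluster/health")" 2 && echo "cluster healthy"
```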

Step 2: Setting Up Logstash on Node 3

Logstash is used to aggregate and process logs from various sources before sending them to Elasticsearch.

2.1 Install Logstash

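1. Install Logstash: Logstash, Kibana, and Filebeat come from the same Elastic APT repository, so first add that repository on Node 3 as in Step 1.1 (item 2). Then run:

```bash
sudo apt update
sudo apt install logstash -y
```

2.2 Configure Logstash to Forward Logs to Elasticsearch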

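1. Create a Logstash configuration file:

```bash
sudo nano /etc/logstash/conf.d/logstash.conf
```

2. Add the following configuration to collect syslog entries and forward them to Elasticsearch (replace `<node1-ip>` and `<node2-ip>` with your Elasticsearch nodes' addresses):

```
input {
  file {
    path => "/var/log/syslog"
    start_position => "beginning"
  }
}

filter {
  # Example of filtering syslog events. Logstash 8.x enables ECS compatibility
  # by default, so the file input stores the source path in [log][file][path].
  if [log][file][path] =~ "syslog" {
    grok {
      # The message text is captured as syslog_message rather than log,
      # because [log] already holds ECS file metadata.
      match => { "message" => "%{SYSLOGTIMESTAMP:timestamp} %{SYSLOGHOST:hostname} %{DATA:program} %{GREEDYDATA:syslog_message}" }
    }
    date {
      # Syslog pads single-digit days with a space, hence "MMM  d" (two spaces).
      # If your lines start with an RFC 3339 timestamp instead, match
      # %{TIMESTAMP_ISO8601:timestamp} in grok and use "ISO8601" here.
      match => [ "timestamp", "MMM  d HH:mm:ss", "MMM dd HH:mm:ss" ]
    }
  }
}

output {
  elasticsearch {
    hosts => ["http://<node1-ip>:9200", "http://<node2-ip>:9200"]
    index => "logs-%{+YYYY.MM.dd}"
  }
  # Also print each event to the console, for debugging
  stdout { codec => rubydebug }
}
```

3. Test the Configuration: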

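```bash
sudo -u logstash /usr/share/logstash/bin/logstash --path.settings /etc/logstash -f /etc/logstash/conf.d/logstash.conf --config.test_and_exit
```

4. Start and Enable Logstash: The `logstash` service user needs read access to `/var/log/syslog`, which Debian limits to root and the `adm` group. Add the user to that group first:

```bash
sudo usermod -aG adm logstash
sudo systemctl enable logstash
sudo systemctl start logstash
```

Step 3: Setting Up Kibana on Node 3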

Kibana is a visualization tool that connects to Elasticsearch and lets you create visual dashboards.

3.1 Install Kibana

1. Install Kibana on Node 3. The package comes from the Elastic APT repository configured in Step 1.1:

```bash
sudo apt update
sudo apt install kibana -y
```

3.2 Configure Kibana to Connect to Elasticsearch

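1. Edit the Kibana configuration file:

```bash
sudo nano /etc/kibana/kibana.yml
```

2. Update the file with the following settings (replace `<node1-ip>` and `<node2-ip>` with your Elasticsearch nodes' addresses):

```yaml
server.host: "0.0.0.0"
elasticsearch.hosts: ["http://<node1-ip>:9200", "http://<node2-ip>:9200"]
```

3. Start and Enable Kibana: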

```bash
sudo systemctl enable kibana
sudo systemctl start kibana
```

3.3 Access Kibana

- Open a web browser and go to `http://<node3-ip>:5601`.

- You should see the Kibana dashboard. From here, you can configure and visualize log data.
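Kibana can take a minute or two to start, and a browser opened too early may show an error. A small retry loop removes the guesswork. This sketch uses a hypothetical `wait_for` helper. It assumes that Kibana's `/api/status` endpoint returns a successful HTTP status once Kibana is ready. With security enabled, the endpoint may require credentials, in which case add `-u` to the curl call.

```bash
#!/usr/bin/env bash
# Sketch: retry a command until it succeeds (wait_for is a hypothetical helper).
# wait_for ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
wait_for() {
  local attempts="$1" delay="$2" i=1
  shift 2
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    # Sleep between attempts, but not after the last one.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}

# Usage (replace <node3-ip>): poll every 5 seconds, up to 30 attempts.
# wait_for 30 5 curl -sf -o /dev/null "http://<node3-ip>:5601/api/status" && echo "Kibana is up"
```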

Step 4: Sending Logs to Logstash

To gather logs from various servers, configure each server to forward logs to Logstash.

4.1 Install Filebeat on Client Servers

Filebeat is an Elastic tool designed to send logs to Logstash or Elasticsearch.

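1. Install Filebeat: First add the Elastic APT repository on each client server as in Step 1.1 (item 2). Then run:

```bash
sudo apt update
sudo apt install filebeat -y
```

2. Configure Filebeat to Send Logs to Logstash:

```bash
sudo nano /etc/filebeat/filebeat.yml
```

3. Update the Filebeat configuration (replace `<node3-ip>` with the address of the Logstash node). Filebeat allows only one enabled output, so also comment out the default `output.elasticsearch` section in this file: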

```yaml
filebeat.inputs:
- type: log  # deprecated in favor of "filestream" in recent Filebeat releases
  enabled: true
  paths:
    - /var/log/syslog

output.logstash:
  hosts: ["<node3-ip>:5044"]
```

4. Start and Enable Filebeat:

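```bash
sudo systemctl enable filebeat
sudo systemctl start filebeat
```

5. Verify Logs in Kibana: Logstash must be listening on port 5044 before logs can arrive (see Step 5). Once it is, logs from Filebeat should appear in the Kibana dashboard within a few minutes.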
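If logs do not show up, first confirm that the client can reach Logstash on port 5044. Filebeat has a built-in check, `sudo filebeat test output`, which tests the connection to the configured output. The sketch below is a lower-level alternative that only tests TCP reachability. `port_open` is a hypothetical helper that relies on bash's `/dev/tcp` redirection and on the `timeout` command from GNU coreutils, and `<node3-ip>` is a placeholder. Until the beats input from Step 5 is running, expect the check to fail.

```bash
#!/usr/bin/env bash
# Sketch: test whether HOST:PORT accepts TCP connections (port_open is hypothetical).
# Uses bash's /dev/tcp redirection and GNU coreutils `timeout`.
# port_open HOST PORT [TIMEOUT_SECONDS]
port_open() {
  # $1/$2 inside the single-quoted script are the host and port passed after "port_open".
  timeout "${3:-3}" bash -c 'exec 3<>"/dev/tcp/$1/$2"' port_open "$1" "$2" 2>/dev/null
}

# Usage on a client (replace <node3-ip>):
# port_open "<node3-ip>" 5044 && echo "Logstash reachable" || echo "cannot reach Logstash on 5044"
```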

Step 5: Configuring Logstash to Receive Logs from Filebeat

Since Filebeat sends logs on port 5044 by default, we need to configure Logstash to receive them.

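1. Edit the Logstash Configuration File:

```bash
sudo nano /etc/logstash/conf.d/logstash.conf
```

2. Add the Filebeat input: By default, Logstash merges every file in `/etc/logstash/conf.d` into a single pipeline, so events from every input reach every output. If Filebeat is your only log source, you can replace the file's contents with the following: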

```
input {
  beats {
    port => 5044
  }
}

output {
  elasticsearch {
    hosts => ["http://<node1-ip>:9200", "http://<node2-ip>:9200"]
    index => "filebeat-%{+YYYY.MM.dd}"
  }
}
```

3. Restart Logstash:

```bash
sudo systemctl restart logstash
```

4. Check Kibana: Logs should now appear in Kibana under the `filebeat-*` index.
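To confirm from the command line that Filebeat events are being indexed, look for the current day's index. Logstash renders `%{+YYYY.MM.dd}` from each event's `@timestamp` in UTC, so this sketch computes the date in UTC too. `daily_index` is a hypothetical helper that needs GNU date (for `-d`), and `<node1-ip>` is a placeholder. The query uses Elasticsearch's `_cat/indices` API.

```bash
#!/usr/bin/env bash
# Sketch: name of the daily index that "filebeat-%{+YYYY.MM.dd}" produces.
# Logstash formats @timestamp in UTC, so the date is computed in UTC as well.
# Requires GNU date (for -d). daily_index is a hypothetical helper.
# daily_index PREFIX [DATE]   (DATE defaults to "now")
daily_index() {
  printf '%s-%s\n' "$1" "$(date -u -d "${2:-now}" +%Y.%m.%d)"
}

# Usage (replace <node1-ip>): list today's Filebeat index, if it exists yet.
# curl -s "http://<node1-ip>:9200/_cat/indices/$(daily_index filebeat)?v"
```

Near midnight, local time and UTC fall on different dates. This is why an event can land in an index whose date differs from the one on your clock.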

Step 6: Setting Up Visualizations and Dashboards in Kibana

Now that logs are flowing into Elasticsearch, you can create custom visualizations and dashboards in Kibana to monitor your environment.

- Create a Data View (called an index pattern before Kibana 8.0):

  - Go to Stack Management > Data Views in Kibana (Management > Stack Management > Index Patterns in Kibana 7.x).

  - Create a data view for filebeat-* or logs-*, depending on your setup.

- Visualize Data:

  - Go to Visualize Library in Kibana and create new charts, graphs, and tables from the indexed log data.

- Create Dashboards:

  - Group multiple visualizations into a single dashboard for an overview of your aggregated data.
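If you provision Kibana with scripts, you can also create the data view over HTTP. This sketch assumes Kibana 8.x, whose data views API accepts `POST /api/data_views/data_view` and requires a `kbn-xsrf` header. `data_view_payload` and `create_data_view` are hypothetical helper names, and `<node3-ip>` is a placeholder. With security enabled, add credentials (for example `-u elastic`) to the curl call.

```bash
#!/usr/bin/env bash
# Sketch: create a Kibana data view through the data views HTTP API.
# Assumes Kibana 8.x; helper names are hypothetical.

# data_view_payload PATTERN: JSON body for a data view on PATTERN keyed on @timestamp.
data_view_payload() {
  printf '{"data_view":{"title":"%s","timeFieldName":"@timestamp"}}\n' "$1"
}

# create_data_view KIBANA_URL PATTERN: POSTs the payload; Kibana's API requires kbn-xsrf.
create_data_view() {
  curl -s -X POST "$1/api/data_views/data_view" \
    -H 'kbn-xsrf: true' \
    -H 'Content-Type: application/json' \
    -d "$(data_view_payload "$2")"
}

# Usage (replace <node3-ip>):
# create_data_view "http://<node3-ip>:5601" 'filebeat-*'
```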

Conclusion: ELK Stack on Debian

You’ve now set up a log aggregation cluster using the ELK Stack on Debian 12. Filebeat and Logstash collect logs from multiple sources, Logstash parses and forwards them, Elasticsearch stores and indexes them, and Kibana visualizes them. You can extend the setup by adding Elasticsearch nodes or by installing Filebeat on more servers and applications, giving you a single place for centralized logging and analysis.